Online Motion Retargetting

Kwang-Jin Choi and Hyeong-Seok Ko, Pacific Graphics '99

Abstract

Online motion retargetting is a method for retargetting the motion of one character to another in real time. The technique is based on inverse rate control, which computes the changes in joint angles that correspond to given changes in end-effector position. While tracking multiple end-effector trajectories of the original subject or character, our online motion retargetting also minimizes the joint-angle differences by exploiting the kinematic redundancy of the animated model. The method can adapt a captured motion to a character with different anthropometry, letting it perform a slightly different motion while preserving the characteristics of the original. Because the retargetting is performed online, a live performance can be mapped onto other characters in real time. Moreover, if the method is used interactively during a motion capture session, on-screen feedback of the retargetted motion gives more opportunities to obtain satisfactory results. As a by-product, the algorithm can be used to reduce measurement errors when restoring captured motion; this data enhancement improves the accuracy of both joint angles and end-effector positions. Experiments show that our retargetting algorithm preserves the high-frequency details of the original motion quite accurately.
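For reference, inverse rate control with a joint-space secondary objective is commonly written as below. This is the textbook resolved-rate formulation, assumed here for illustration; the paper's own equations, gains, and feedback terms may differ. Here $\mathbf{x} = f(\mathbf{q})$ is the forward kinematics of the animated character, $J = \partial f / \partial \mathbf{q}$ its Jacobian, $J^{+}$ the Moore-Penrose pseudoinverse, $\mathbf{q}_d$ the source joint angles, $\mathbf{x}_d$ the desired end-effector positions, and $K$ a hypothetical positive gain:

$$
\dot{\mathbf{q}} = J^{+}\,\dot{\mathbf{x}}_d + \left(I - J^{+}J\right) K\,(\mathbf{q}_d - \mathbf{q})
$$

Because $J\,(I - J^{+}J) = J - JJ^{+}J = 0$, the second term changes the joint angles without changing the end-effector velocity, so the kinematic redundancy is spent on keeping $\mathbf{q}$ close to $\mathbf{q}_d$ while the first term tracks $\mathbf{x}_d$.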

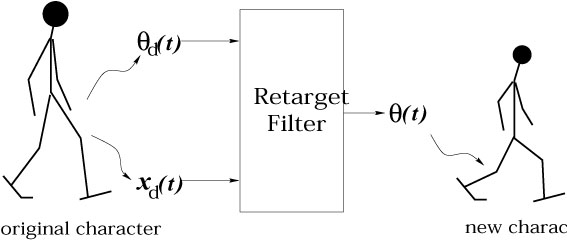

Schema

The input consists of two streams sampled at discrete time ticks: the joint-angle vectors $\mathbf{q}_d$ of the measured subject during the source motion, and the reference (or desired) end-effector positions $\mathbf{x}_d$ of the animated character. The output is a stream of joint-angle vectors $\mathbf{q}$ of the animated character during the destination motion at the corresponding time ticks. The filter in the figure is causal; that is, each output is computed from the current and immediately preceding input values and does not depend on future input. This is why the method is called online.
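The causal filter described above can be sketched as a Python generator that reads one $(\mathbf{q}_d, \mathbf{x}_d)$ pair per tick and yields $\mathbf{q}$ before reading the next. This is a minimal illustration under stated assumptions, not the authors' implementation: the destination character is a hypothetical planar 3-link limb (`LINK_LENGTHS`) with a 2-D end effector, and each tick applies a few standard pseudoinverse updates $\Delta\mathbf{q} = J^{+}\mathbf{e} + (I - J^{+}J)\,k\,(\mathbf{q}_d - \mathbf{q})$, where $\mathbf{e}$ is the end-effector error. The gain `gain_q`, the iteration count `substeps`, and all function names are made up for this sketch.

```python
import math
from typing import Iterable, Iterator, List, Optional, Sequence, Tuple

Pose = Sequence[float]
Point = Tuple[float, float]

# Hypothetical destination character: a planar 3-link limb (3 joints driving a
# 2-D end effector), which leaves one redundant degree of freedom.
LINK_LENGTHS = (1.0, 0.8, 0.6)


def forward_kinematics(q: Pose) -> Point:
    """End-effector (x, y) for relative joint angles q."""
    x = y = angle = 0.0
    for qi, li in zip(q, LINK_LENGTHS):
        angle += qi
        x += li * math.cos(angle)
        y += li * math.sin(angle)
    return x, y


def jacobian(q: Pose) -> List[List[float]]:
    """2 x n Jacobian dx/dq; joint j rotates every link from j outward."""
    n = len(q)
    cum = [sum(q[: i + 1]) for i in range(n)]  # absolute link angles
    jx = [-sum(LINK_LENGTHS[i] * math.sin(cum[i]) for i in range(j, n)) for j in range(n)]
    jy = [sum(LINK_LENGTHS[i] * math.cos(cum[i]) for i in range(j, n)) for j in range(n)]
    return [jx, jy]


def pseudoinverse(J: List[List[float]]) -> List[List[float]]:
    """Right pseudoinverse J^T (J J^T)^-1, an n x 2 matrix, for a full-rank 2 x n J."""
    a = sum(v * v for v in J[0])
    b = sum(u * v for u, v in zip(J[0], J[1]))
    d = sum(v * v for v in J[1])
    det = a * d - b * b
    if det < 1e-12:
        raise ValueError("near-singular configuration")
    inv = [[d / det, -b / det], [-b / det, a / det]]
    return [[J[0][j] * inv[0][k] + J[1][j] * inv[1][k] for k in range(2)]
            for j in range(len(J[0]))]


def retarget_step(q: Pose, qd: Pose, xd: Point, gain_q: float) -> List[float]:
    """One update q + J+ e + (I - J+ J) gain_q (qd - q) (assumed formulation)."""
    n = len(q)
    J = jacobian(q)
    Jp = pseudoinverse(J)
    x = forward_kinematics(q)
    e = (xd[0] - x[0], xd[1] - x[1])               # end-effector tracking error
    z = [gain_q * (s - c) for s, c in zip(qd, q)]  # pull toward source joint angles
    Jz = [sum(J[m][j] * z[j] for j in range(n)) for m in range(2)]
    # Primary task term J+ e, plus z projected onto the null space of J so the
    # secondary objective does not move the end effector (to first order).
    return [q[j]
            + sum(Jp[j][m] * e[m] for m in range(2))
            + z[j] - sum(Jp[j][m] * Jz[m] for m in range(2))
            for j in range(n)]


def online_retarget(stream: Iterable[Tuple[Pose, Point]],
                    q0: Optional[Pose] = None,
                    gain_q: float = 0.2,
                    substeps: int = 10) -> Iterator[Tuple[float, ...]]:
    """Causal filter: yields q for each (q_d, x_d) tick before reading the next."""
    q = list(q0) if q0 is not None else None
    for qd, xd in stream:
        if q is None:
            q = list(qd)  # start from the source pose
        for _ in range(substeps):
            q = retarget_step(q, qd, xd, gain_q)
        yield tuple(q)
```

For example, feeding it a fixed source pose together with an end-effector target that moves around a small circle produces one joint vector per tick, each computed only from that tick's input and the previous output. Iterating several updates within a tick trades a little computation for tighter tracking; a single update per tick would be closer to pure rate control.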

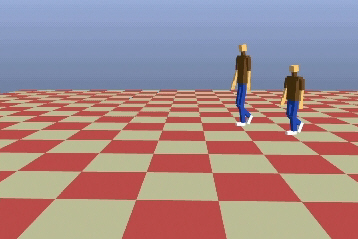

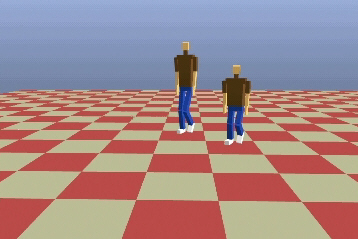

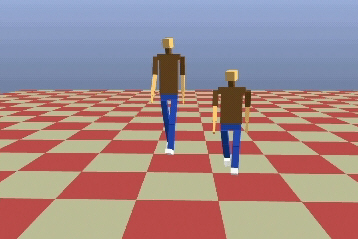

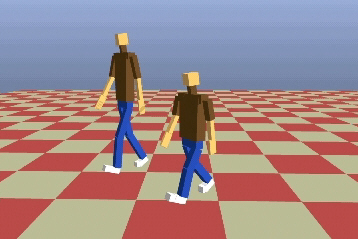

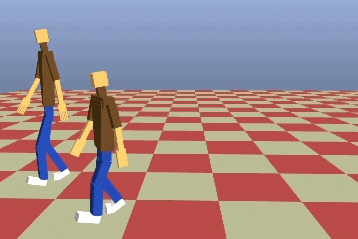

Example 1: Walking motion

In this example, the source motion was locomotion along a curved path, during which the walker took 13 steps. The taller character in the snapshots above is the source character; the smaller one tracks the same foot trajectories while mimicking the original motion.

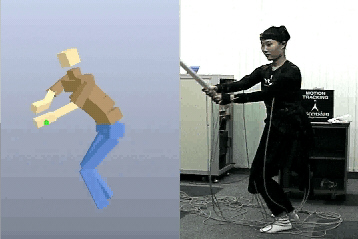

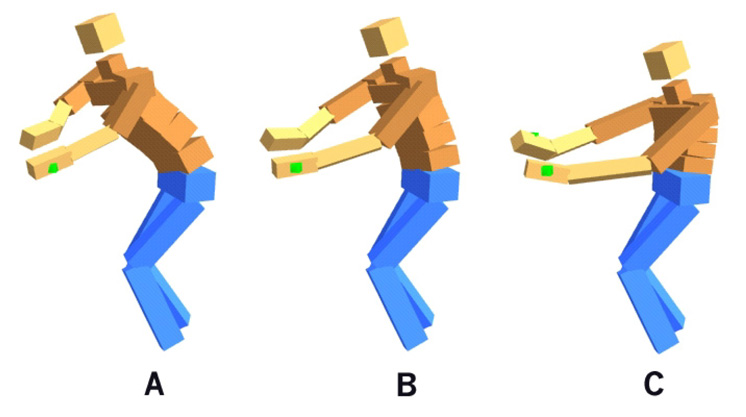

Example 2: Bat-swing motion

In this experiment, an actual performance of a bat swing was processed by our online motion retargetting (OMR) to produce destination motions for three different characters. Since the body dimensions of Character B are similar to those of the real performer, its retargetted motion shows no noticeable difference from the source motion. For Character A, however, the waist bends to lower the hitting position and the torso shifts forward to compensate for the shorter arms. For Character C, the torso bends backward and twists further to account for the longer arms and shorter torso. The snapshots above, taken during the retargetted motions, illustrate these adaptations to the anthropometric differences.

Animation

Online Motion Retargetting (QuickTime movie, 34 MB)

Online Motion Retargetting (zipped QuickTime movie, 19 MB)